In our last blog post we discussed the development of robots and their role in nursing homes. In this post we have tried to collect the laws, principles, and guidelines that govern the use of robotics and artificial intelligence in interactions with the elderly. According to ifr.org, the International Organization for Standardization defines a “service robot” as a robot “that performs useful tasks for humans or equipment excluding industrial automation applications”.

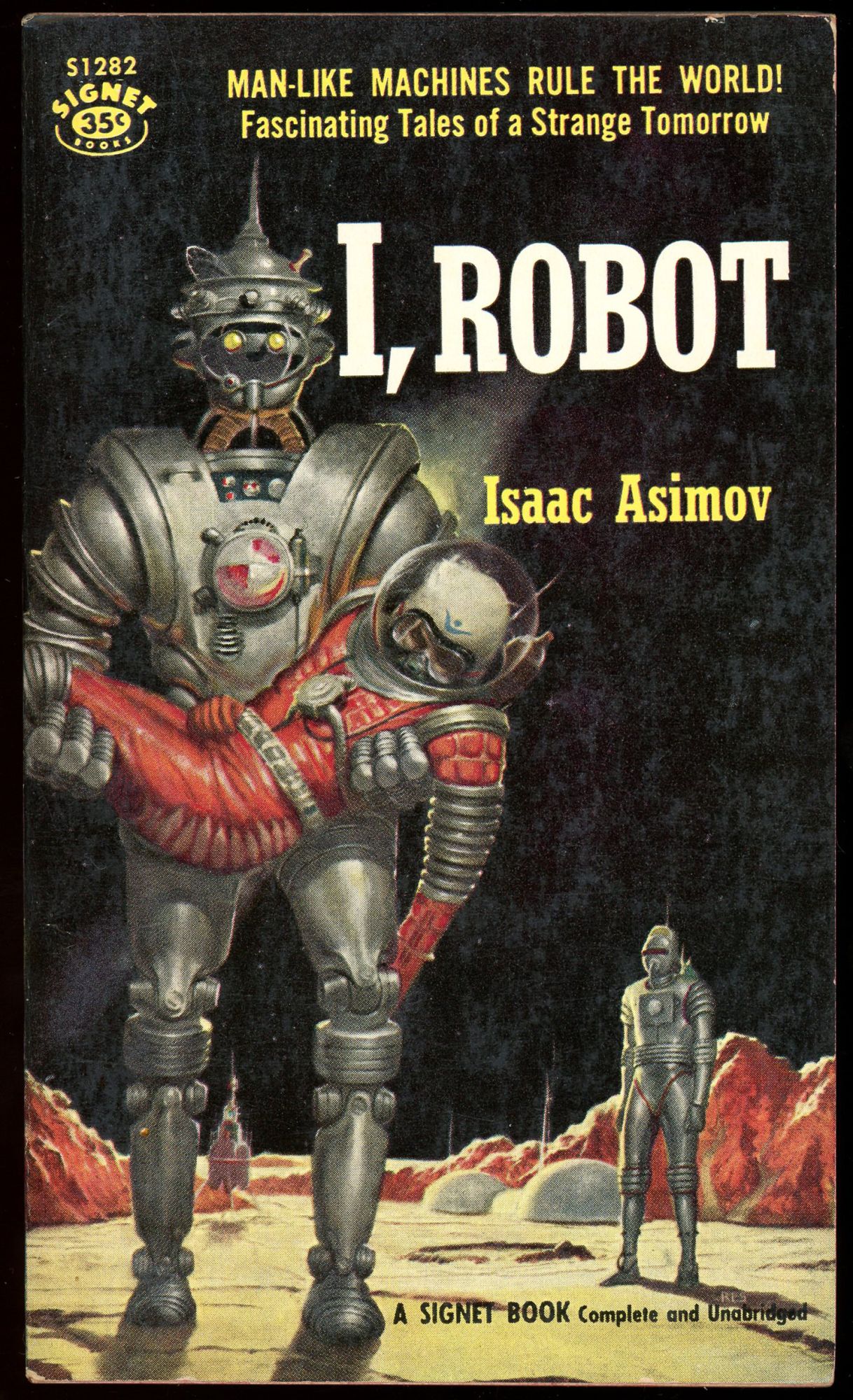

Isaac Asimov was a professor of biochemistry who wrote more than 500 books. One of them, *I, Robot*, is among the most influential works of science fiction. Let's take a look at the Three Laws of Robotics, which Asimov first stated explicitly in his 1942 short story “Runaround” and which were collected in *I, Robot* in 1950:

1- A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

2- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

In later books Asimov added a fourth law, the Zeroth Law, which takes precedence over the other three:

0- A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Asimov's Laws were a useful point of departure, but they were not enough on their own to stop real robots from hurting people. According to HistoryofInformation.com, in 2011 the Engineering and Physical Sciences Research Council (EPSRC) and the Arts and Humanities Research Council (AHRC) published five ethical "principles for designers, builders and users of robots" in the real world:

1. Robots should not be designed solely or primarily to kill or harm humans.

2. Humans, not robots, are responsible agents. Robots are tools designed to achieve human goals.

3. Robots should be designed in ways that assure their safety and security.

4. Robots are artifacts; they should not be designed to exploit vulnerable users by evoking an emotional response or dependency. It should always be possible to tell a robot from a human.

5. It should always be possible to find out who is legally responsible for a robot.

Since then, many other guidelines and principles have been published to help robots interact usefully with the elderly.

The Universal Guidelines for Artificial Intelligence were released at the Public Voice event in Brussels on October 23, 2018. They set out twelve principles:

1. Right to Transparency. All individuals have the right to know the basis of an AI decision that concerns them. This includes access to the factors, the logic, and techniques that produced the outcome.

2. Right to Human Determination. All individuals have the right to a final determination made by a person.

3. Identification Obligation. The institution responsible for an AI system must be made known to the public.

4. Fairness Obligation. Institutions must ensure that AI systems do not reflect unfair bias or make impermissible discriminatory decisions.

5. Assessment and Accountability Obligation. An AI system should be deployed only after an adequate evaluation of its purpose and objectives, its benefits, as well as its risks. Institutions must be responsible for decisions made by an AI system.

6. Accuracy, Reliability, and Validity Obligations. Institutions must ensure the accuracy, reliability, and validity of decisions.

7. Data Quality Obligation. Institutions must establish data provenance, and assure quality and relevance for the data input into algorithms.

8. Public Safety Obligation. Institutions must assess the public safety risks that arise from the deployment of AI systems that direct or control physical devices, and implement safety controls.

9. Cybersecurity Obligation. Institutions must secure AI systems against cybersecurity threats.

10. Prohibition on Secret Profiling. No institution shall establish or maintain a secret profiling system.

11. Prohibition on Unitary Scoring. No national government shall establish or maintain a general-purpose score on its citizens or residents.

12. Termination Obligation. An institution that has established an AI system has an affirmative obligation to terminate the system if human control of the system is no longer possible.